With Lucidchart’s AI Prompt Flow extension, you can experiment with LLMs (large language models) and improve your AI prompts, no matter how technically savvy you are (or are not)! You can use the extension to model your AI flow with multiple user inputs, using sample interactions to help train responses.

When I was learning how to use AI Prompt Flow, it was hard to know where to start or what the possibilities were. The most helpful thing for me was seeing example prompts and outputs. Because of that experience, we in the Lucid Community want to encourage you to share all the ways you are using AI Prompt Flow to improve your AI prompts!

To kick us off, below are a couple of examples I created while experimenting and learning how to use the tool.

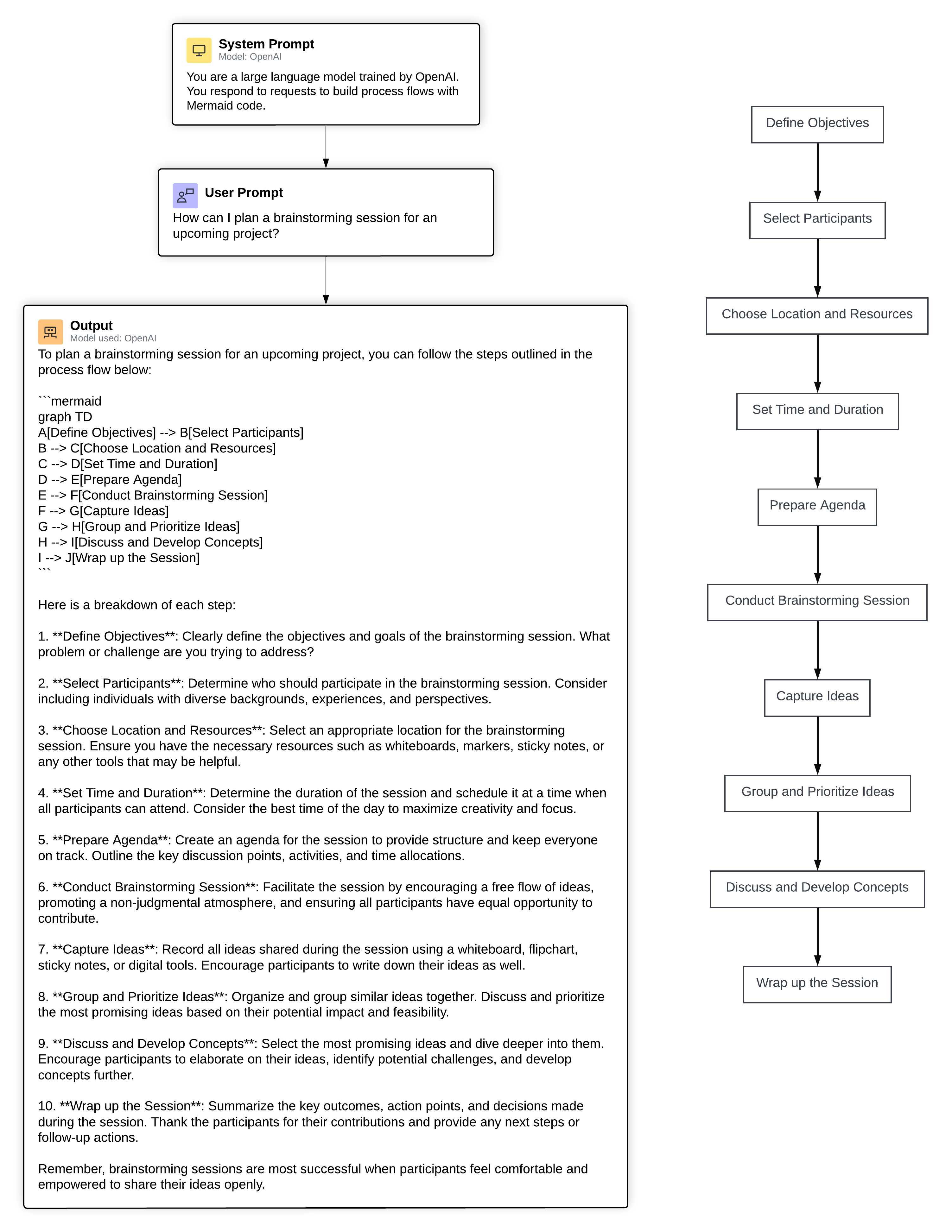

For this first example, I used a System Prompt, a User Prompt, and User Variables.

This is the output the AI Prompt Flow created:

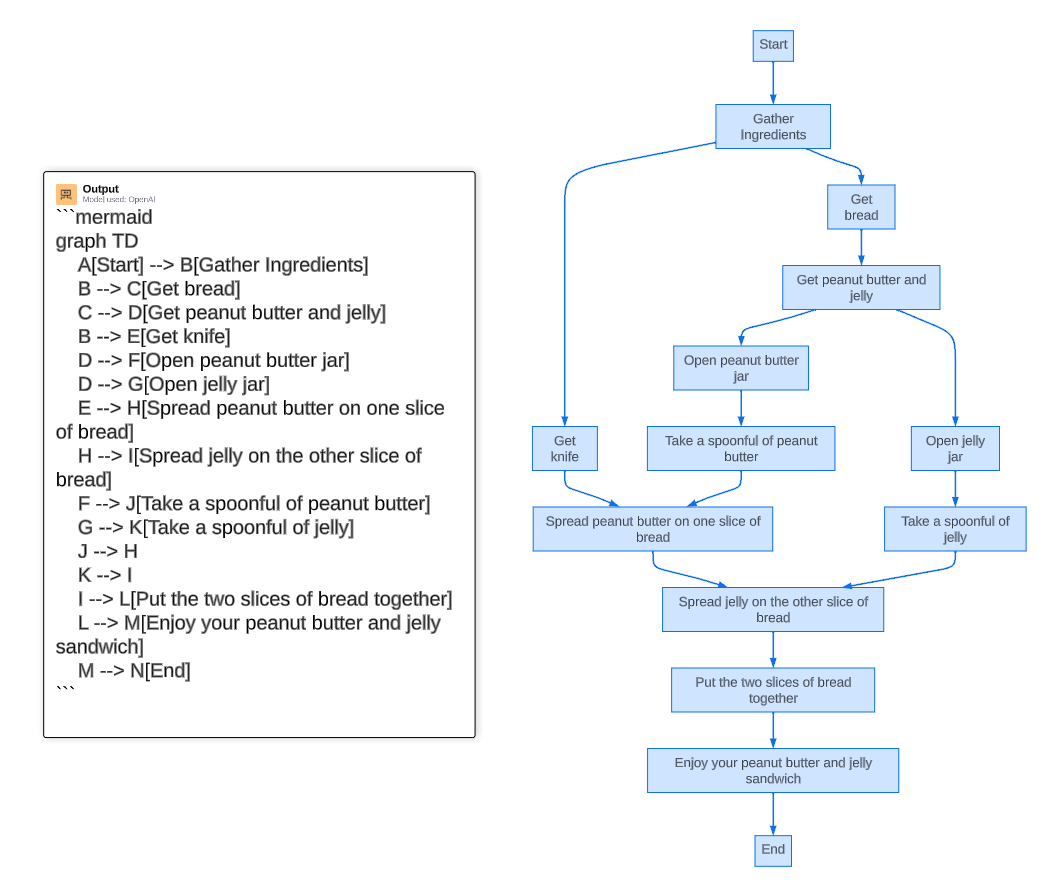

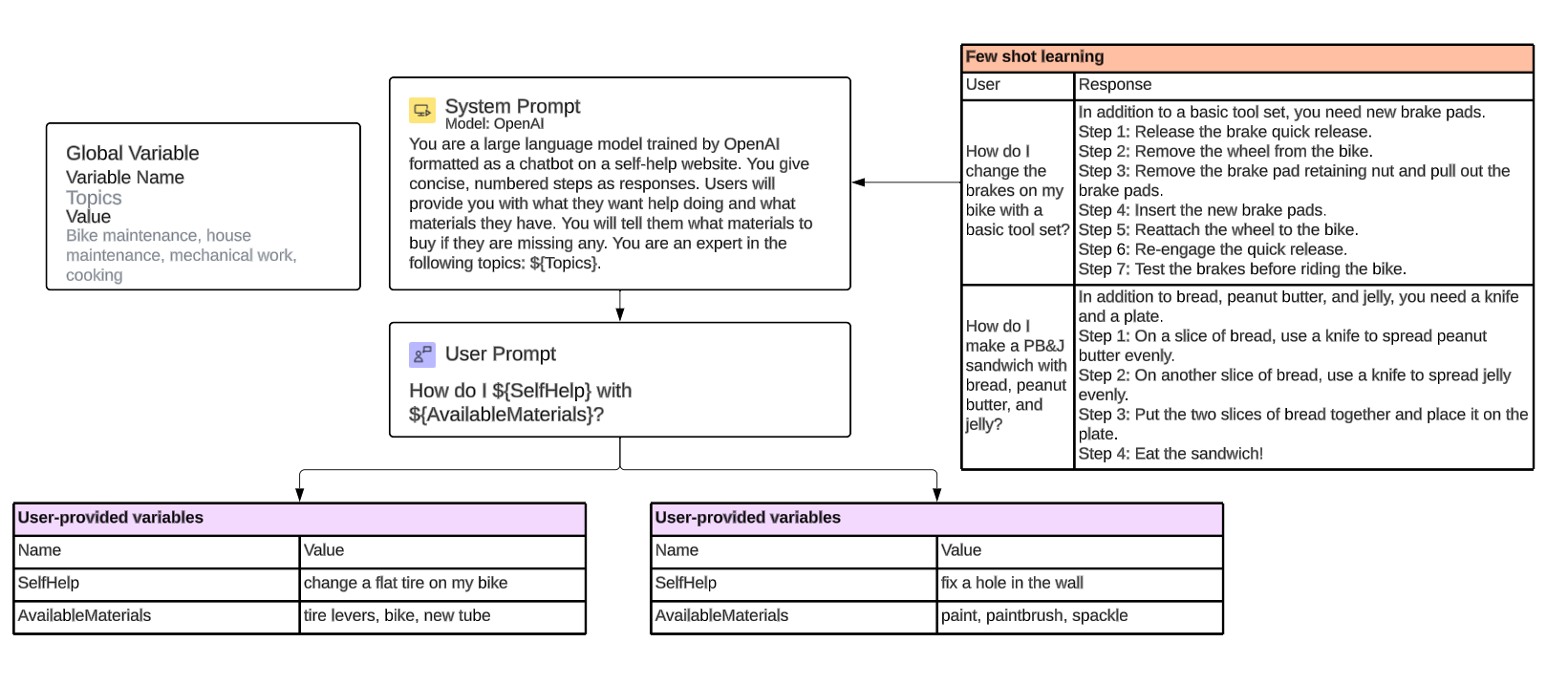
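If it helps to picture how a System Prompt, a User Prompt, and User Variables fit together, here is a rough sketch in Python. It is only an illustration of the general idea, not how AI Prompt Flow works under the hood. The function name `build_messages`, the `{city}` and `{season}` placeholders, and all of the sample text are made up for this sketch and are not taken from the screenshots above.

```python
# Illustrative only: a generic sketch of combining prompt parts.
# Nothing here is Lucid's API; every name and value is hypothetical.

def build_messages(
    system_prompt: str,
    user_prompt_template: str,
    user_variables: dict[str, str],
) -> list[dict[str, str]]:
    """Fill the user prompt template with variables and pair it with the system prompt."""
    # Replace each {placeholder} in the template with its variable value.
    user_prompt = user_prompt_template.format(**user_variables)
    return [
        {"role": "system", "content": system_prompt},
        {"role": "user", "content": user_prompt},
    ]


# Hypothetical sample values (assumptions, chosen only for the demo).
system_prompt = "You are a friendly travel guide. Keep answers under 100 words."
user_prompt_template = "Suggest three things to do in {city} during {season}."
user_variables = {"city": "Lisbon", "season": "spring"}

messages = build_messages(system_prompt, user_prompt_template, user_variables)
for message in messages:
    print(f"{message['role']}: {message['content']}")
```

The system prompt stays fixed while the variables change, so you can try many inputs against the same instructions and compare the responses.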

For my second example, I wanted to see how all the different parts of an AI model work together, so I started with this:

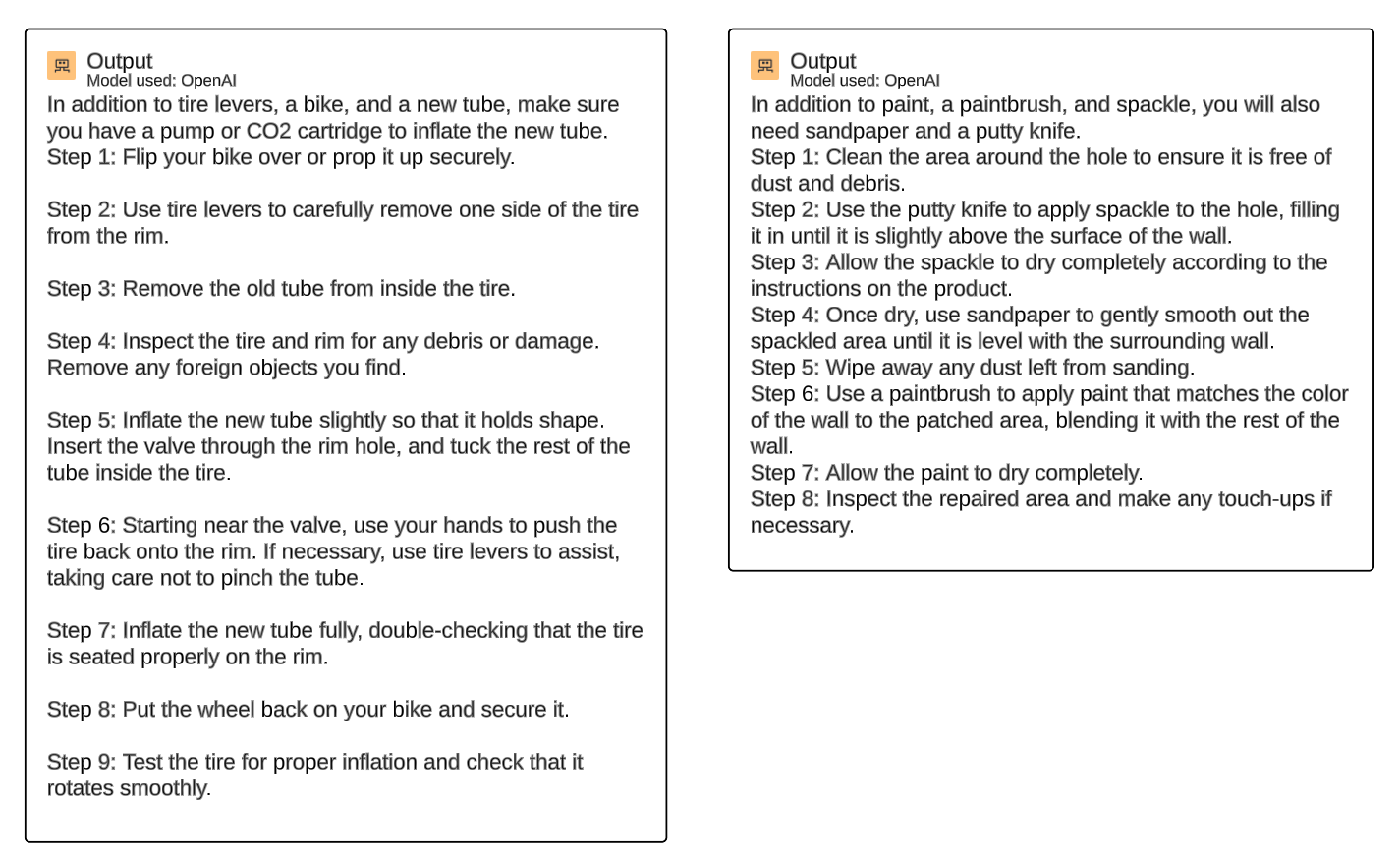

And here is the output the AI Prompt Flow returned:

With this tool, the ways to improve your AI flows are endless. How are you using AI Prompt Flow? Post your ideas and examples below!