Hi Lucid Community, we are excited to announce a new way for you to increase your efficiency and productivity when working with others in Lucid: Collaborative AI!

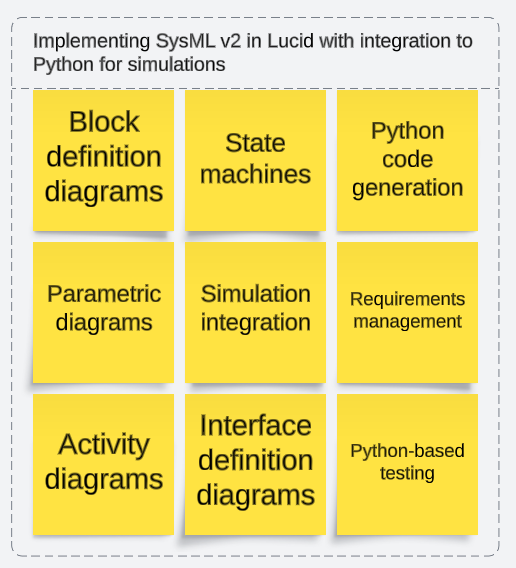

By incorporating artificial intelligence and machine learning capabilities across the Lucid Suite, our products will help you unlock the power of divergent thinking, be more creative and innovative, and get more done in less time. Overall, our goal is to provide the following capabilities to your teams with Collaborative AI:

- Unlock the power of divergent thinking: identify patterns and connections, foster inclusivity and diversity when problem solving, and facilitate collaboration between teammates with different perspectives.

- Supplement your team’s thoughts by sparking additional ideas and helping cover all angles: leverage vast data to identify unnoticed problems, capture a wider range of perspectives and ideas, and reduce cognitive bias when identifying key themes.

- Increase productivity and efficiency across teams: automate time-consuming tasks, allocate resources effectively, and free up time for creativity and innovation.

Our goal is for Collaborative AI to integrate seamlessly into your workflow in these ways, and we want to understand what would make this experience powerful and useful for you. Please use this thread to share questions, concerns, and ideas about AI in Lucid. Here are some conversation starters to get your gears turning:

✨ How comfortable are you with incorporating AI into your workflow?

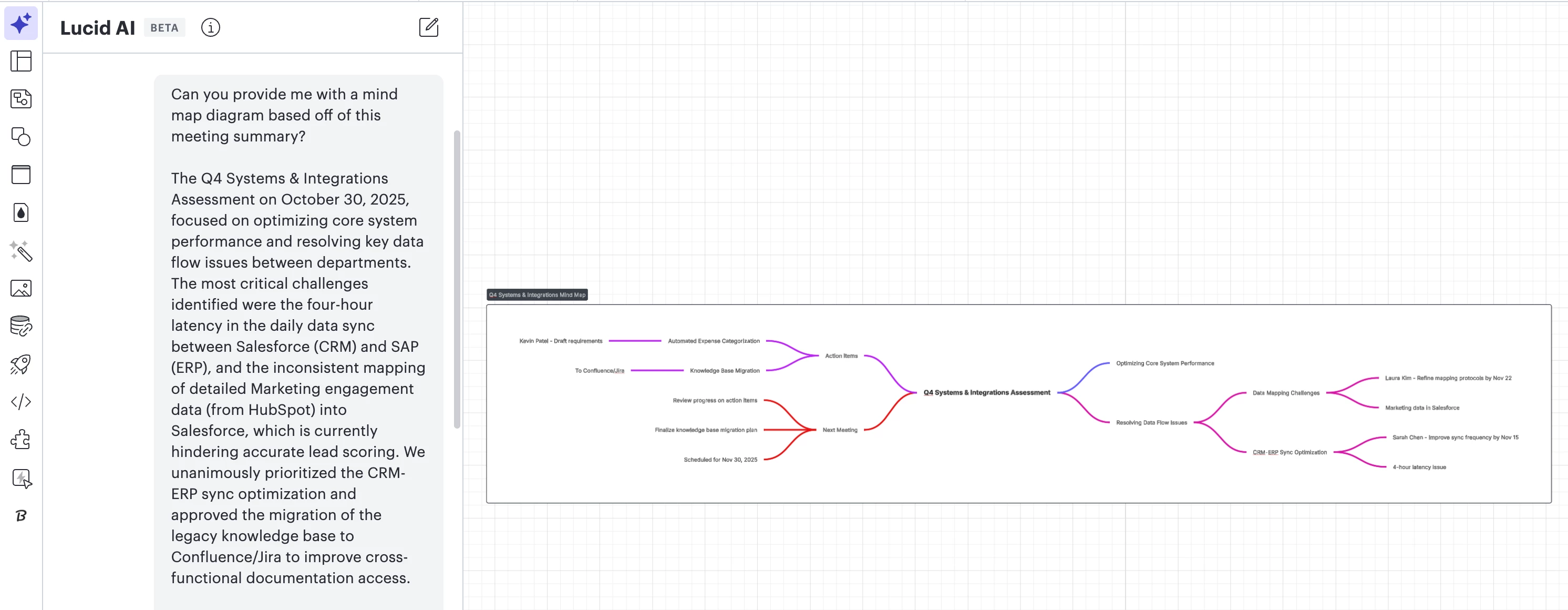

✨ How would you like to use AI within Lucid?

✨ What repetitive tasks do you complete within Lucid that would benefit from automation?

✨ When brainstorming or planning in Lucid, what themes, patterns, or needs would be helpful to identify?

✨ What types of data do you analyze using Lucid?

✨ What would you need or want in order to start using AI in your workflows?

✨ Beyond Lucid, how do you see AI being impactful in your line of work?

Note: Collaborative AI is currently in beta. Please refer to the Collaborative AI article in the Lucid Help Center for up-to-date details and functionality information.